What’s your favorite way to learn?

Skill-Building, OpenAI DevDay, and Creativity in the Latent Space

What I’m covering in this newsletter:

What’s your favorite way to learn? I’ve been exploring new ways to build skills.

OpenAI DevDay is in a week! I’ll share some prompts for exploring ideas once the new tools come out.

I’m pretty amped about ideas that explore the latent space, so I’m highlighting a couple of talks from the recent AI Engineer Summit.

Also Happy Halloween!! 🎃👻🍬

🛠️ Reflections on learning and making

I’ve been focusing on improving my software development skills, specifically in design and engineering, and thinking of them as crafts. I worked through a few tutorials but found that most of the material was familiar, or the process felt like it was dragging. A buddy recommended I go to YouTube, watch someone build a project, and then recreate it, understanding it part by part. At first I felt disoriented and stressed because the material was all new. Then things started to become familiar.

It makes me think of the fast.ai teaching strategy, where you build something end to end, tweak it, and get familiar with it through play, and only then go into the details. I like that. The video I chose was Let’s Build GPT from Scratch. It’s amazing; about 30 minutes in I was in over my head, but I just kept going. It assumes some knowledge of neural nets and PyTorch covered earlier in the video series. I’m curious how it’ll feel to rewatch it after watching the earlier videos. That sense of progress is exhilarating.

👉 Have you found ways you like to learn? I’d love to hear about it!

💡 Heading to OpenAI DevDay

OpenAI’s first developer conference is on Monday:

The one-day event will bring hundreds of developers from around the world together with the team at OpenAI to preview new tools and exchange ideas. In-person attendees will also be able to join breakout sessions led by members of OpenAI’s technical staff.

I’m curious what the new tools will be, and I expect they’ll inspire a whole new range of apps. My hunch is that one of them will be an API for GPT-4V, giving people access to image-based reasoning in their apps. At the AI Engineer Summit earlier this month, OpenAI shared a couple of demos focused on GPT-4V’s vision capabilities:

Take a photo. AI generates a description of it and pipes that to DALL·E 3 to make an image. You can then ask the AI to compare the two images and spot the differences, and use that comparison to generate another image.

Given a video, AI can generate a blog post about it using video stills and the transcript.

The spot-the-differences demo struck me. That’s not an intuitive use, and I’m curious about other creative possibilities that will only become clear through making. I built a prototype with image-based reasoning to see what ideas it sparks.

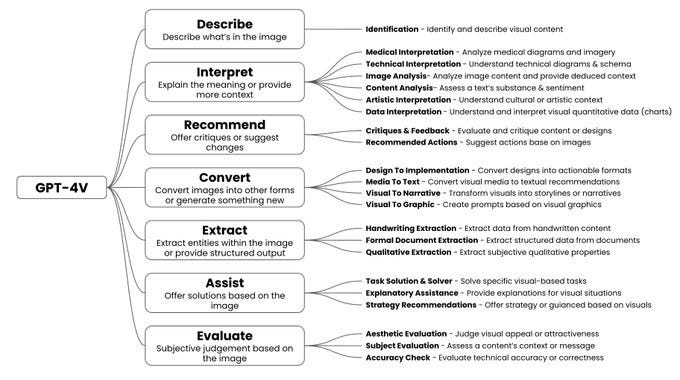

The vision capability is currently available to some ChatGPT Plus users, and there are a bunch of interesting explorations on Twitter. I love this overview of use cases by Greg Kamradt:

For me, some of the most compelling use cases are Interpret, Convert, and Extract. For Convert, I’m specifically thinking of the examples that turn an image into code, because that opens up a new way of working with the medium.

I’m most interested in how we create new UXs on top of powerful AI. Linus Lee suggests one approach: consider context, intent, and action. What is the surrounding context, what is the user’s intent, and what action should the LLM take as a result? LLMs often live in a box separate from the apps themselves. What would it be like to include them right in the context where you’re working? How might we move beyond a chat on the side of an app to an interface that is more integrated?

🌌 On my mind: The latent space!

The latent space feels like rich territory for playing with AI × UX. You can use an embedding to represent an image or a piece of text as a vector, then find other vectors similar to it or do math on them to tweak the output in different ways. It’s a pretty wild creative exercise.
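To make that idea a bit more concrete, here’s a minimal Python sketch of the two operations: finding the most similar vector with cosine similarity, and doing arithmetic on vectors. The three-dimensional vectors are made-up toy values, not output from a real embedding model (real ones have hundreds or thousands of dimensions), and the helper names are hypothetical.

```python
import math

# Hypothetical toy embeddings. The values are invented for illustration;
# a real embedding model would return much longer vectors.
EMBEDDINGS: dict[str, list[float]] = {
    "cat":    [0.90, 0.10, 0.00],
    "kitten": [0.85, 0.15, 0.30],
    "dog":    [0.80, 0.60, 0.00],
    "puppy":  [0.75, 0.65, 0.30],
    "car":    [0.00, 0.10, 0.95],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query: list[float], exclude: tuple[str, ...] = ()) -> str:
    """Return the word whose embedding is most similar to query, skipping `exclude`."""
    candidates = {w: v for w, v in EMBEDDINGS.items() if w not in exclude}
    return max(candidates, key=lambda w: cosine_similarity(query, candidates[w]))


def add(a: list[float], b: list[float]) -> list[float]:
    """Component-wise sum of two vectors."""
    return [x + y for x, y in zip(a, b)]


def sub(a: list[float], b: list[float]) -> list[float]:
    """Component-wise difference of two vectors."""
    return [x - y for x, y in zip(a, b)]


# Similarity search: which other word sits closest to "cat"?
print(nearest(EMBEDDINGS["cat"], exclude=("cat",)))  # kitten

# Vector math: kitten - cat + dog, roughly "the young version of dog".
query = add(sub(EMBEDDINGS["kitten"], EMBEDDINGS["cat"]), EMBEDDINGS["dog"])
print(nearest(query, exclude=("kitten", "cat", "dog")))  # puppy
```

With these toy numbers, kitten - cat + dog lands essentially on puppy. With real embeddings the result only ends up near the expected neighbor, which is why the sketch searches for the closest match instead of expecting an exact hit.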

I found this exploration by Amelia Wattenberger to be the clearest introduction to using the latent space as a creative tool. This is my favorite kind of demo: you see it and all these other ideas come to mind! I also recommend her talk at the AI Engineer Summit, which has several inspiring demos exploring interfaces for AI and frameworks for thinking about this space.

Linus Lee shares a few demos and introduces Contra in his talk from the AI Engineer Summit. In the image demo, he pans from a cartoon of himself to a photo, showing all of the images in between. For text, there’s a fascinating demo where he finds the direction corresponding to length and moves along it to adjust the text length.
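Both of those demos come down to moving through the latent space. Here’s a minimal Python sketch of just the vector side, under a few stated assumptions: panning is treated as linear interpolation between two embeddings, and the length direction is estimated as the difference between the average embedding of long texts and that of short texts. That difference-of-means trick is a common technique I’m assuming here, not necessarily how Contra finds its directions, and the 2-D vectors and function names are hypothetical. Turning each vector back into an image or text would need a model that can decode embeddings, which this sketch leaves out.

```python
import math

Vector = list[float]


def lerp(a: Vector, b: Vector, t: float) -> Vector:
    """Linear interpolation: t=0 gives a, t=1 gives b."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def interpolate(a: Vector, b: Vector, steps: int) -> list[Vector]:
    """Return `steps` evenly spaced points from a to b, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(a, b, i / (steps - 1)) for i in range(steps)]


def mean(vectors: list[Vector]) -> Vector:
    """Component-wise average of a list of same-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def direction(positive: list[Vector], negative: list[Vector]) -> Vector:
    """Unit vector pointing from the mean of `negative` toward the mean of `positive`."""
    diff = [p - n for p, n in zip(mean(positive), mean(negative))]
    norm = math.sqrt(sum(d * d for d in diff))
    return [d / norm for d in diff]


def move_along(v: Vector, unit_dir: Vector, amount: float) -> Vector:
    """Shift v by `amount` along a unit direction; negative amounts move the other way."""
    return [x + amount * d for x, d in zip(v, unit_dir)]


# Hypothetical 2-D embeddings for the image demo.
cartoon = [1.0, 0.0]
photo = [0.0, 1.0]
path = interpolate(cartoon, photo, steps=5)  # cartoon, three in-between points, photo

# Hypothetical embeddings of short and long texts, used to estimate a "length" direction.
short_texts = [[0.1, 0.5], [0.2, 0.4]]
long_texts = [[0.9, 0.5], [0.8, 0.6]]
length_dir = direction(long_texts, short_texts)

# Nudge the first short text toward "longer".
longer = move_along(short_texts[0], length_dir, amount=0.5)
print(path[2], longer)
```

Because `move_along` takes a signed amount, the same direction can push text toward longer or shorter; decoding each shifted vector is how you’d check whether the direction really tracks length.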

Thanks for reading!

📚 Check out my AI Resources list. I made this list for myself to stay up to date on AI things and organize resources I find helpful.

📞 Book an unoffice hours conversation: We could talk about something you’re working on, jam on possibilities for collaboration, share past experiences and stories, draw together / make a zine, or meditate.

🙌 Follow what I’m up to by subscribing here and see my AI projects here. If you know anyone who would find this post interesting, I’d really appreciate it if you forwarded it to them! And if you’d like to jam more on any of this, I’d love to chat here or on Twitter.