What would you make, now that your apps can see and reason?

Image-based reasoning, a collection of AI resources, launching unoffice hours

These past few weeks I’ve been getting settled in Oakland. After a couple of years in Maryland, it’s great to be back in the Bay. I’ve found that it’s so much easier for me to go deep and get creative when I have a routine (and a desk!). So I dusted off my AI projects from earlier in the year and set out to work on new prototypes too.

TL;DR

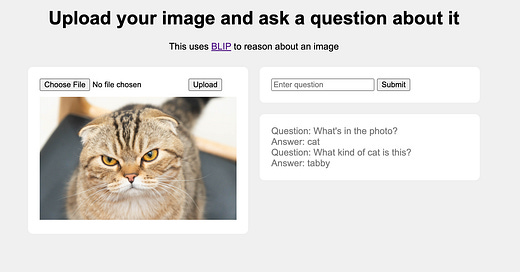

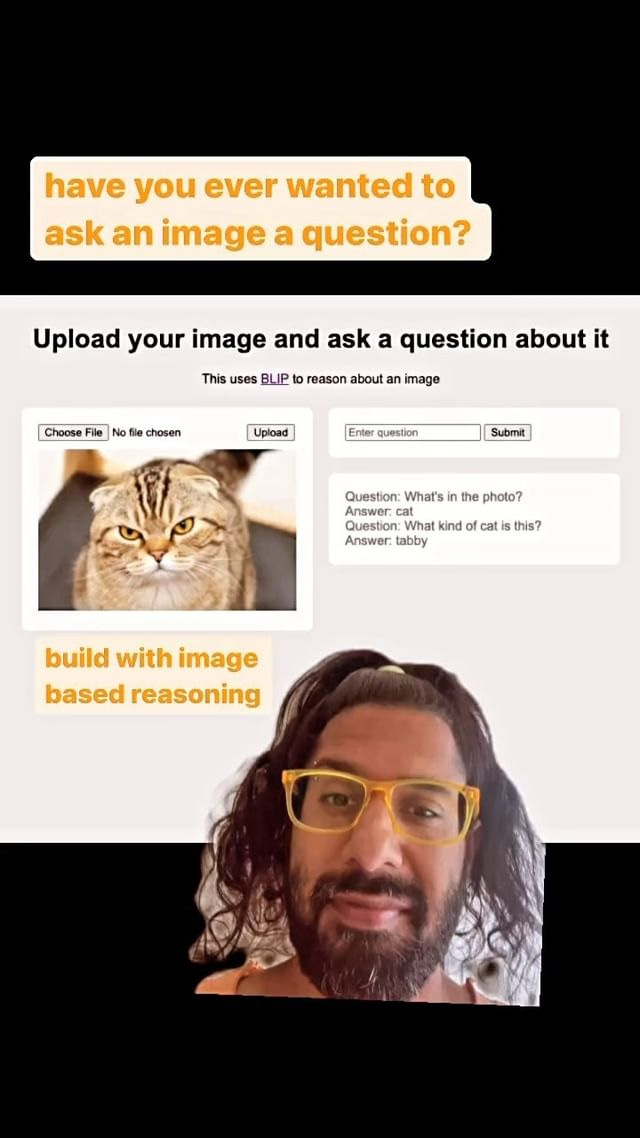

Have you ever wanted to ask an image a question? BLIP-play is a prototype that answers your questions about an image. Upload an image, and ask a question. It uses the BLIP model via Replicate. Demo video.

Where do you go when you’re looking to ramp up and stay up to date on the AI space? Check out AI Resources where I list helpful sources.

For more serendipity and inspiring conversations, I’m opening up my weekly calendar for unoffice hours! Book a 30m slot. Check out the trailer.

An Ask: I’m exploring full-time roles in this space and am currently doing informational interviews. Know someone I should meet? Let’s chat! I’d love to meet with folks in SF/Oakland. See more about what I’m looking for.

LFBuild 🚀🚀🚀 : Building with image-based reasoning

A few weeks ago GPT-4V launched on ChatGPT Plus, letting users start to get a feel for image-based reasoning. Here’s a great overview of use cases people are finding. Common use cases include interpreting the meaning of images, converting images to other formats (like code!), and extracting data from images (like the handwritten notes in your journal).

I think it may be some time before we really understand what image-based reasoning means and what it feels like. I wanted to get a sense of what it’s like to build with it, and found the BLIP model on Replicate. This model can caption images and also answer questions about an image. What would it be like to have a Q&A chat with an image?

I used GPT-4 to get started and made a simple Python app to upload the image and send questions to the model. The questions and answers are listed alongside the image, chat style.
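If you want to try something similar, here’s a minimal sketch of the question-and-answer loop. It is not the code from my project, just an illustration under a few assumptions: it uses the `replicate` Python client, the input names (`image`, `task`, `question`) and the `Answer:` prefix on the output reflect my understanding of the BLIP model page, and the version id in `BLIP_MODEL` is a placeholder you’d copy from Replicate. The helper names (`ask_image`, `chat`, and so on) are made up for this example.

```python
from typing import Callable, List, Optional, Tuple

# Placeholder: copy the real version id from the model page on Replicate.
BLIP_MODEL = "salesforce/blip:<version-id>"


def build_input(image, question: str) -> dict:
    """Build the request payload (input names are assumed, see the model page)."""
    return {
        "image": image,
        "task": "visual_question_answering",
        "question": question,
    }


def clean_answer(raw) -> str:
    """Drop a leading 'Answer:' label if the model output carries one."""
    text = str(raw).strip()
    prefix = "answer:"
    if text.lower().startswith(prefix):
        text = text[len(prefix):].strip()
    return text


def ask_image(image_path: str, question: str,
              run: Optional[Callable] = None) -> str:
    """Send one question about one image and return the cleaned answer."""
    if run is None:
        # Imported lazily so the helpers above work without the package.
        # The client reads the REPLICATE_API_TOKEN environment variable.
        import replicate
        run = replicate.run
    with open(image_path, "rb") as image:
        raw = run(BLIP_MODEL, input=build_input(image, question))
    return clean_answer(raw)


def chat(image_path: str, questions: List[str],
         run: Optional[Callable] = None) -> List[Tuple[str, str]]:
    """Ask several questions and return (question, answer) pairs, chat style."""
    return [(q, ask_image(image_path, q, run=run)) for q in questions]
```

Calling `chat("photo.jpg", ["What is in the picture?", "What color is it?"])` would give you the list of question and answer pairs to render next to the image. The optional `run` argument is only there so you can swap in a fake function and test the flow without calling the API.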

The answers are so curt it’s kind of hilarious. In a future iteration I could combine this with GPT-3.5 to make it more conversational.

How would you riff on this? What would you want to make with image-based reasoning? What would be possible as it improves? Check out a video demo of this project, and find the code here.

AI resources 📚 to ramp up and stay up to date

As a maker in the AI space, how might I stay up to date and keep learning with the latest info, tools, and techniques? It can be intimidating.

I made this doc for myself to get back up to date on AI things and organize the resources I find helpful. The 🌊 Feed is a way to find the latest, and the 📚 Courses are deep dives. I’m most interested in the MLOps side, so I can make cool projects. If there are other helpful resources you check regularly or would recommend, I’d love to know!

👉 Check out my AI Resources list

Let’s hang! Launching Unoffice Hours

I’m interested in more serendipity, especially as I think about what’s next, so I’m starting weekly unoffice hours. We could talk about something you’re working on, jam on possibilities for collaboration, share past experiences and stories, draw together / make a zine, or meditate.

Check out the trailer:

Unoffice hours is inspired by Matt Webb, and was introduced to me by Jonah Goldsaito.

What’s Next

Continuing self-study to improve my programming and design skills, and looking to build another AI prototype before the end of the month.

Planning a creatives happy hour next week in SF. I’d love this to be a regular informal gathering of makers.

I’ll be attending OpenAI DevDay in a few weeks.

Thanks for reading! You can follow what I’m up to by subscribing here and see my AI projects here. If you know anyone who would find this post interesting, I’d really appreciate it if you forwarded it to them! And if you’d like to jam more on any of this, I’d love to chat here or on Twitter.