This newsletter covers what I’m building and learning in creative AI. Today’s updates:

🚀 New prototypes exploring the future of websites with Upstatement

💡 Giving AI talks and workshops: “Shaping AI with Playful Futures”

✨ Livecoding with a quartet!

🌀 wonder zone 🌀

Lately I’ve been wondering about toys and LLMs, or, more broadly, playable art and games. Things like fidget toys. What might LLM-native games look like? How might I make LLM-materials that encourage play? I’m thinking of things like LEGO and Play-Doh: things that just ignite the imagination.

Lots of projects from the past month. Let’s get into it 🙌

Exploring the future of websites with AI

Companies see a world changing with AI. They see value being created and wonder, What should I do right now? How can I leverage this in my digital presence?

In January, I joined Upstatement to explore ideas and build prototypes as an AI Experience Research Engineer. What I’ve enjoyed most is working closely with experts in design, branding, and product for websites, who also draw on insights from working with clients. A deep understanding of client problems makes the work much more motivating. I see a lot of opportunity around AI for websites, and I’m amped to be building it with this team.

👉 Check out a recap of this work in my post on the Upstatement blog, “How we’re imagining the future of websites.”

Here are three prototypes from the last month:

Turn images into words. I used GPT-4V to describe an image with increasing levels of detail, and ran these prompts on a range of images: a photo, a diagram, and a painting. The descriptions not only get into what’s happening in the image, they also interpret aspects of the layout and the form, and even the artistic style. You can play with the prototype here.
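
For anyone curious how the "increasing levels of detail" idea could be set up, here is a minimal sketch. It only builds the request payloads and makes no network call. The three prompts and the function names are hypothetical, not the ones from the prototype, and the message shape follows the image-input format of OpenAI's chat API as I understand it, so treat it as an assumption to check against the current docs.

```python
import base64

# Hypothetical prompts, ordered from least to most detailed.
DETAIL_PROMPTS = [
    "Describe this image in one sentence.",
    "Describe this image in a short paragraph, including its layout.",
    "Describe this image in depth: content, layout, form, and artistic style.",
]


def build_messages(image_bytes: bytes, prompt: str, mime: str = "image/png") -> list:
    """Build one chat-style request: a text prompt plus the image as a data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:{mime};base64,{encoded}"},
                },
            ],
        }
    ]


def build_all_requests(image_bytes: bytes) -> list:
    """Return one message list per detail level, in increasing order of detail."""
    return [build_messages(image_bytes, prompt) for prompt in DETAIL_PROMPTS]
```

Each message list would then be sent to a vision-capable model, one call per detail level, so the descriptions can be compared side by side.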

Spatially browse a website with AI. I made a visualization of the Upstatement blog where each post is a point. The posts are grouped by similarity because they’re spatially arranged based on their embeddings. When you hover over a node, you can see the title and an AI-generated summary of the post. When you click a node, it opens the article to read. I expand on this prototype in the next one; the prototype link is below.
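
To make "spatially arranged based on their embeddings" a bit more concrete, here is a rough sketch of turning high-dimensional embeddings into 2D points. It uses PCA (computed with power iteration, standard library only), which is my stand-in for illustration: the prototype may well use a different projection such as UMAP or t-SNE. All function names are hypothetical.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def center(vectors):
    """Subtract the per-dimension mean so the projection is about variation, not offset."""
    n, dim = len(vectors), len(vectors[0])
    means = [sum(v[i] for v in vectors) / n for i in range(dim)]
    return [[v[i] - means[i] for i in range(dim)] for v in vectors]


def top_component(rows, iterations=200, seed=0):
    """Power iteration: find the direction of greatest variance in the rows."""
    rng = random.Random(seed)
    dim = len(rows[0])
    w = [rng.uniform(-1, 1) for _ in range(dim)]
    norm = math.sqrt(dot(w, w)) or 1.0
    w = [x / norm for x in w]
    for _ in range(iterations):
        scores = [dot(r, w) for r in rows]  # X w
        new_w = [sum(s * r[i] for s, r in zip(scores, rows)) for i in range(dim)]  # X^T (X w)
        norm = math.sqrt(dot(new_w, new_w))
        if norm == 0:  # no variance left in the data
            break
        w = [x / norm for x in new_w]
    return w


def project_2d(embeddings):
    """Project each embedding onto the first two principal components."""
    rows = center(embeddings)
    first = top_component(rows)
    # Remove the first component from every row, then find the next direction.
    deflated = [[x - dot(r, first) * f for x, f in zip(r, first)] for r in rows]
    second = top_component(deflated, seed=1)
    return [(dot(r, first), dot(r, second)) for r in rows]
```

Posts with similar embeddings end up near each other on the plane, which is what makes the grouping visible when each post is drawn as a point.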

AI-generated topics and categories for a website. Jumping off the last prototype, I used an LLM to read all of the blog posts and then label them by topics and categories. I integrated this into the visualization by using the category to determine the node color, and adding the topics and category info to the article preview. You can explore the prototype here.
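
Here is a small sketch of the "category determines the node color" step. It assumes the LLM is asked to reply with JSON like `{"category": "...", "topics": [...]}`; that reply format, the palette, and the function names are all hypothetical and not taken from the prototype.

```python
import json

# Hypothetical palette; colors are reused in order if there are more categories.
PALETTE = ["#e4572e", "#17bebb", "#ffc914", "#76b041", "#6a4c93"]


def parse_labels(llm_response: str) -> dict:
    """Parse an assumed JSON reply into a normalized category and a topic list."""
    data = json.loads(llm_response)
    return {
        "category": str(data["category"]).strip().lower(),
        "topics": [str(topic).strip() for topic in data.get("topics", [])],
    }


def assign_colors(categories) -> dict:
    """Give each distinct category a stable color, in order of first appearance."""
    colors = {}
    for category in categories:
        if category not in colors:
            colors[category] = PALETTE[len(colors) % len(PALETTE)]
    return colors
```

Normalizing the category text before assigning colors keeps near-duplicates like "Design" and " design " from getting two different colors.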

How might these demos inform the future of websites?

👉 More implementation details and use cases in the blog post, check it out: “How we’re imagining the future of websites.”

Using play to see new AI possibilities

I gave a talk “Shaping AI with Playful Futures” at the first Agape AI salon:

When I think about making AI things, I like to begin with the question: what futures would you like to see? And then I create demos that show realities moving toward that. What would a future look like with more care, collaboration, creativity, agency, and play?

You can see the intro to my talk here. The full recording should be available soon.

I’ve started to share my work in AI talks, both at meetups and internally. If you’re interested in talks and workshops, get in touch!

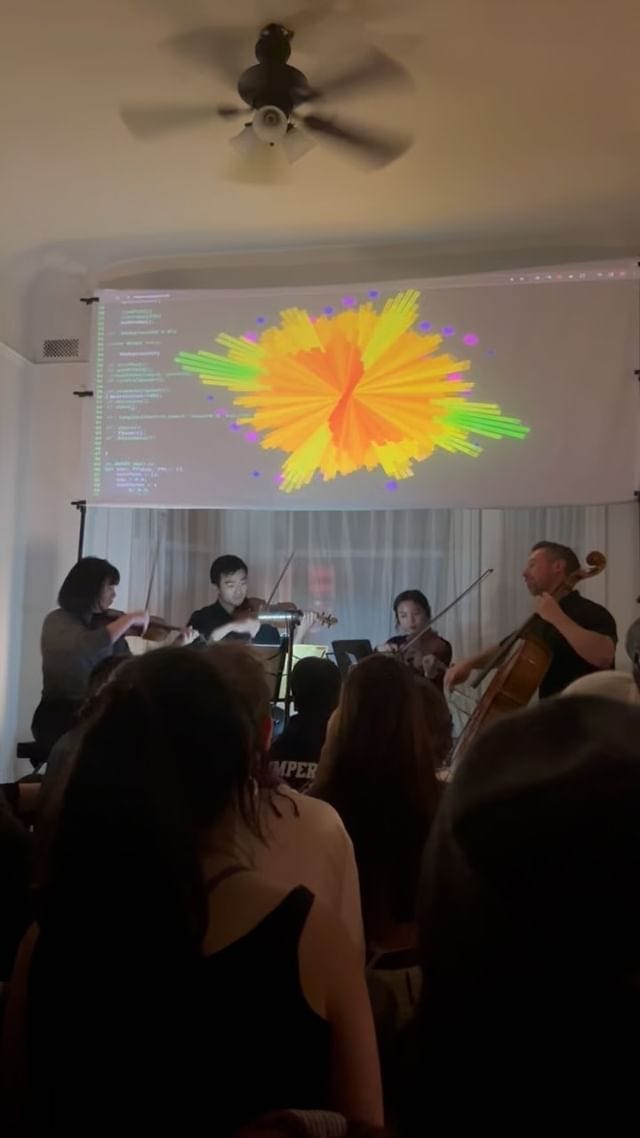

Livecoding with a Quartet

My big creative challenge this past month was creating a visual piece alongside a live performance of Sergei Prokofiev's String Quartet No. 2. I wanted the visuals to be tightly coupled to the sound, so I started with audio reactive elements and sketched patterns around them.

I listened to the piece over and over and coded. It was intense! Gradually a set came together, which I performed live by mixing the patterns and then modulating them, using p5Live.

I love the beginning of the composition: it’s enormous, it’s spectacular. What a way to start. I wanted visuals that could mirror this. From nothing, to everything. I used an audio-reactive, burst-like pattern. When there is silence, it’s a small dot, and when there is sound, it blooms open based on the volume across frequencies. You can see it here:
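
As a rough sketch of the burst mapping described above (not the actual p5Live code, which would be JavaScript), here is the core idea in Python: each frequency bin drives one ray, and the ray length grows with that bin's volume. The function name, the radius values, and the assumption that amplitudes arrive normalized to the range 0.0 to 1.0 are all mine.

```python
import math


def burst_points(spectrum, min_radius=4.0, max_radius=200.0):
    """Map frequency-bin amplitudes (0.0 to 1.0) to points around a circle.

    Each bin drives one ray of the burst. Silence collapses every ray to
    min_radius, which reads on screen as a small dot.
    """
    n = len(spectrum)
    points = []
    for i, amplitude in enumerate(spectrum):
        amplitude = min(max(amplitude, 0.0), 1.0)  # clamp out-of-range input
        radius = min_radius + amplitude * (max_radius - min_radius)
        angle = 2 * math.pi * i / n
        points.append((radius * math.cos(angle), radius * math.sin(angle)))
    return points
```

Calling this once per frame with the latest spectrum and connecting the points gives a shape that sits as a dot in silence and blooms open as the music gets louder.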

Thanks for reading!

🙌 Follow what I’m up to by subscribing here and see my AI projects here. If you know anyone who would find this post interesting, I’d really appreciate it if you forwarded it to them! And if you’d like to jam more on any of this, I’d love to chat here or on Twitter.

📞 Book an unoffice hours conversation: We could talk about something you’re working on, jam on possibilities for collaboration, share past experiences and stories, draw together / make a zine, or meditate.

📚 Check out my AI Resources list. I made this list for myself to stay up to date on AI things and organize resources I find helpful.