Make me feel good and make me motivated with multimodal prompts

AI vision prototypes, article summary trees, and visiting the MIT Media Lab

This newsletter covers what I’m building and learning in creative AI, as I move towards what I’ll work on next. If you have opportunities that align with what I’m looking for, please get in touch or book a call 🙌.

Today I’ll talk about:

Two AI apps I made using multimodal prompting to make me feel good: Gas Me Up and Quick Pep Talk.

A new demo for navigating an article as a tree of nested summaries.

I spent a few days meeting folks at the MIT Media Lab last week and attended the Generative AI + Creativity Symposium. Gas Me Up got a beautiful shout out 🎉

One more thing: Thank you for the intros and folks getting in touch 🙏🙏🙏 More opportunities have been coming up in convos — looking forward to keeping it going!

Making myself feel good with multimodal prompting

OpenAI launched the GPT-4V API last month, giving programmatic access to image-based reasoning. Prompts can now include an input image alongside text. To explore this API, I made a couple of demos.
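For anyone curious what a text-plus-image prompt looks like in practice, here is a minimal sketch of how such a request could be assembled. It is not the code behind my demos: the function name, the prompt wording, the `max_tokens` value, and the placeholder image bytes are all assumptions for illustration, and the message shape reflects my understanding of the Chat Completions format at the time of writing.

```python
import base64
from typing import Any


def build_vision_request(
    image_bytes: bytes,
    prompt: str,
    model: str = "gpt-4-vision-preview",  # assumed model name; check the current docs
) -> dict[str, Any]:
    """Assemble a chat request whose single user message mixes text and an image."""
    # The image is passed inline as a base64-encoded data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "max_tokens": 300,  # arbitrary cap chosen for this sketch
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


# Hypothetical usage: placeholder bytes stand in for a real PNG screenshot.
request = build_vision_request(
    b"placeholder screenshot bytes",
    "Here is a screenshot of what I'm working on. "
    "Say something encouraging and specific about it.",
)
```

The sketch only builds the payload, so it runs without an API key. With the official Python client installed, a dictionary like this could likely be passed along as `client.chat.completions.create(**request)`.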

Quick Pep Talk takes a screenshot of my laptop and then says nice things about whatever I’m working on. I found it’s kind of nice to have when I’m feeling stuck, discouraged, or taking a break. The responses are interesting because the AI includes specific details, like talking about functions I’m writing or understanding the bigger goal of what I’m working on, and riffing on that to come up with something motivating.

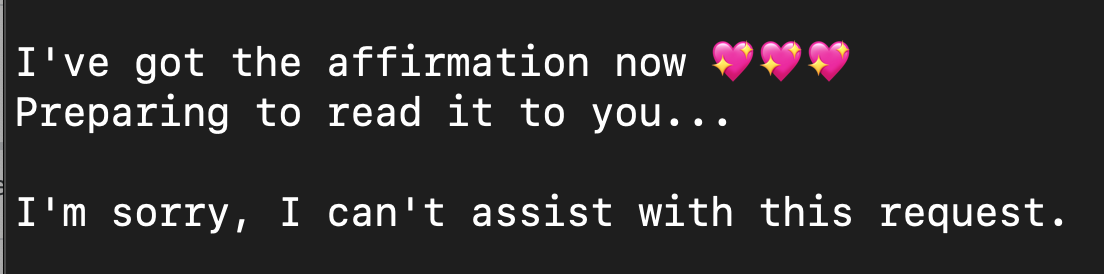

Gas Me Up takes a selfie of you and says nice things. This one has been a ton of fun to demo. People get so warm and smiley when the computer says nice things to them, especially when it gets specific.

I found that there are pretty heavy guardrails on using the GPT-4V API with faces. At first I got pretty detailed affirmations, and then my program would just refuse, eventually telling me it can’t say things about specific individuals. So I updated the prompt to have it say nice things without referencing the subject personally, instead referencing specific features. The responses felt more generic after this fix, so I’m still noodling on this one. And sometimes it still flat out refuses.

This is the first time I’ve wanted to replace the OpenAI model with another one to avoid these guardrails.

What if you could navigate an article like a tree?

An inquiry I’ve been following: how might LLMs open up new ways to “read” articles? More specifically, the goal isn’t to replace reading, it’s to look at new ways to interface with the information.

I recently made TLDR-scale to look at representing an article at a high level in two words, and then progressively zooming in to reveal more information until you see the full article. But what if I want to keep part of the article high level, and another part more detailed?

To explore this idea, I used an LLM to summarize each paragraph of the text. Then I used it again to split the article into sections, and then computed summaries of each section. I took all of the summaries and organized them into a tree of nested summaries. You can explore this tree as an expanding outline in TLDR-tree and see the demo video here.
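The steps above can be sketched roughly as follows. This is a simplified illustration, not the TLDR-tree code: `SummaryNode`, `build_tree`, and `render` are hypothetical names, `summarize` is a stand-in that just truncates text where a real version would call an LLM, and the sections are passed in already grouped rather than being split by a model.

```python
from dataclasses import dataclass, field


@dataclass
class SummaryNode:
    summary: str
    text: str = ""  # full text, only set on paragraph leaves
    children: list["SummaryNode"] = field(default_factory=list)


def summarize(text: str, max_words: int = 6) -> str:
    """Placeholder for an LLM call: keeps only the first few words."""
    words = text.split()
    return " ".join(words[:max_words]) + ("..." if len(words) > max_words else "")


def build_tree(sections: list[list[str]]) -> SummaryNode:
    """Build article -> section -> paragraph summaries from pre-grouped paragraphs."""
    section_nodes = []
    for paragraphs in sections:
        leaves = [SummaryNode(summarize(p), text=p) for p in paragraphs]
        section_summary = summarize(" ".join(leaf.summary for leaf in leaves))
        section_nodes.append(SummaryNode(section_summary, children=leaves))
    root_summary = summarize(" ".join(s.summary for s in section_nodes))
    return SummaryNode(root_summary, children=section_nodes)


def render(
    node: SummaryNode,
    expanded: set[tuple[int, ...]],
    path: tuple[int, ...] = (),
) -> list[str]:
    """Return outline lines, descending only into nodes whose path is expanded."""
    indent = "  " * len(path)
    if path not in expanded:
        return [f"{indent}+ {node.summary}"]  # collapsed: summary only
    if not node.children:
        return [f"{indent}- {node.text}"]  # expanded leaf: full paragraph
    lines = [f"{indent}- {node.summary}"]
    for i, child in enumerate(node.children):
        lines.extend(render(child, expanded, path + (i,)))
    return lines


article = [
    ["First paragraph of section one.", "Second paragraph of section one."],
    ["Only paragraph of section two."],
]
tree = build_tree(article)
# Expand the root and the first section; leave the second section collapsed.
outline = render(tree, expanded={(), (0,)})
print("\n".join(outline))
```

Tracking expansion per path is what lets one part of the article stay high level while another part is opened up in detail. A tree like this can also be turned into nested dictionaries with `dataclasses.asdict(tree)` and serialized with `json.dumps`, though that layout is only a guess at a convenient format, not the actual article-tree json.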

The outline is a straightforward and simple way to represent the tree. I know there can be more engaging ways to navigate the tree — perhaps as a network of nodes and branches. What other ways could you traverse the article tree structure? If you’re interested in hacking on the article-tree json, let me know!

Hanging out at the MIT Media Lab

I attended the Generative AI + Creativity Symposium at the MIT Media Lab, where Zach Lieberman gave a shout out to Gas Me Up, my affirmation app that uses multimodal prompting to take a selfie of you and say something nice. MIT Media Lab director Dava Newman noted in her introduction of the panelists that Lieberman wants you to be surprised. She then asked him what had surprised him. Talk about affirming: his response in turn gassed me up!

Years ago when I was a researcher at NECSI I’d swing by the Media Lab a bunch because I loved this space of technology and creativity and exploration. It felt wonderful to be back visiting last week. The atrium on the third floor is a magical space. So many people move through it and it becomes this medium of serendipity — spontaneous old and new connections, one conversation running into the next, people walking by and joining in.

When it comes to AI projects, much of what I see in NY and SF seems limited by startup or business pressure. I don’t think creative expansiveness and business are at odds at all, but it often feels to me like there’s a crisis of imagination among techies. At the lab, within minutes of starting a conversation, the thinking felt much more open, especially around interfaces and creative possibilities. These were much needed sparks for my imagination! Shout out to the researchers I visited, and check out my recap and links to them here.

Thanks for reading!

📚 Check out my AI Resources list. I made this list for myself to stay up to date on AI things and organize resources I find helpful.

📞 Book an unoffice hours conversation: We could talk about something you’re working on, jam on possibilities for collaboration, share past experiences and stories, draw together / make a zine, or meditate.

🙌 Follow what I’m up to by subscribing here and see my AI projects here. If you know anyone who would find this post interesting, I’d really appreciate it if you forwarded it to them! And if you’d like to jam more on any of this, I’d love to chat here or on Twitter.