Imagining new interfaces for websites with Retrieval Augmented Generation (RAG)

... plus! a creative gathering in Austin, jamming on AI + play, and more dancy livecoding

Join in with what I’m building and learning in creative AI ⚡ in this post I’ll talk about:

🚀 Prototypes with Upstatement exploring new ways to browse websites with AI

💡 A talk and brainstorm on AI & play at IDEO

✨ DJ and livecoding collabs with HELLADEEP.

⭐ This Thursday, 3/28 I’m throwing an event in Austin. It’s the weekly drink & draw & hang I throw in Oakland, only this time in Austin! I’ll be there with a few of the Oakland organizers. If you’re in the area, swing by :) Or fwd to buds nearby. It’s a space to gather and share and doodle and dream and giggle.

I’m going to take the next few days off as Q1 2024 comes to a close and wanted to share an update before I head out. Feeling super grateful for the adventures, projects, and collaborations this quarter 🥰 Thanks as always for reading. The responses, conversations, and unoffice calls have been incredibly motivating. If you’re interested in working together on creative AI projects, my contract work with Upstatement wraps up at the end of April, so I’ll be open for projects and consulting. Until then, I’m excited for what I’ll be working on with them and for you to see it when it’s live 🙌

🌀 wonder zone 🌀 I’ve been thinking of interactive and collaborative creative experiences with AI, drawing from my past work in interactive and public art. The first time I felt this with genAI tools was when the near real-time LCM models came out. I made this prototype to imagine creating together around a table. What tools/models would be useful here? What projects come to mind?

🧠 Browsing websites with RAG

My work this past month as an AI Experience Research Engineer at Upstatement involved diving into new ways to search and browse websites. Think about when it’s been hard to find what you’re looking for on a website. Retrieval augmented generation (RAG) has been gaining popularity, and for good reason. It gives us the ability to easily share data with LLMs without re-training or fine-tuning them, and can help keep their answers up to date and more factual.

So how might we better browse and find what we’re looking for, especially in information-dense websites? RAG can be a way. To make this exploration more concrete, I looked at a very specific example of navigating a directory. You can see the HBS faculty directory here. At the moment, you can navigate it by clicking a professor’s page, or using the filters in the sidebar. But what if you want to browse across all the professors and find those whose research would be most useful for you?

In my first prototype I gave an LLM access to the directory information by indexing the directory pages with LlamaIndex, and connecting it to an LLM using RAG. Check out the first demo video here for more information:

You can see how new use cases open up. It’s like having the information meet you where you’re at. Based on what I need, RAG will surface the most relevant information from the directory. Say I’m prototyping AI projects, whose work should I check out? Now I can find out. If you’re curious how it was made, check out this second video. It gets into the specifics of finding the data and the LlamaIndex features that were most helpful.
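If it helps to picture the retrieval step, here is a minimal sketch of the idea. It is not the prototype's code and it does not use LlamaIndex: it swaps in a toy word-overlap (cosine similarity) search from the Python standard library, and the directory entries, names, and function names are all made up for illustration. The shape is the same though: find the most relevant entries for a question, then hand them to an LLM as context.

```python
import math
import re
from collections import Counter

# Hypothetical directory entries (assumption); real data would come from the indexed pages.
DIRECTORY = [
    {"name": "Professor A", "bio": "Studies machine learning and AI adoption in product teams."},
    {"name": "Professor B", "bio": "Researches corporate finance and capital markets."},
    {"name": "Professor C", "bio": "Focuses on negotiation and leadership in organizations."},
]


def vectorize(text):
    """Turn text into a bag-of-words count vector (a stand-in for real embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, entries, top_k=2):
    """Return the top_k entries whose bios are most similar to the query."""
    q = vectorize(query)
    ranked = sorted(entries, key=lambda e: cosine(q, vectorize(e["bio"])), reverse=True)
    return ranked[:top_k]


def build_prompt(query, entries):
    """Assemble the retrieved entries and the question into a prompt for an LLM."""
    context = "\n".join(f"- {e['name']}: {e['bio']}" for e in entries)
    return f"Answer using only the directory entries below.\n\n{context}\n\nQuestion: {query}"


query = "I'm prototyping AI projects, whose work on machine learning should I check out?"
prompt = build_prompt(query, retrieve(query, DIRECTORY))
```

The resulting `prompt` would then be sent to whatever LLM you're using. A real setup would use embeddings rather than word overlap, which is the kind of thing a library like LlamaIndex handles for you.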

Finally, an ongoing thread you may notice from my work with LLMs is that I’m interested in interfaces that go beyond chat. The challenge with AI chat is that it’s hard to tell what the tool knows and what it’s good at. You kind of have to use it a bunch to get a feel for it. This next prototype seeks to improve on the RAG chat interface using features inspired by the concepts of guidance and refinement:

Guidance helps introduce people to the tool. In this case it shows personas and use cases. This gives a place to start, and then there is an open-ended input to expand from there. Refinement gives options to further explore a tool. Here we use an LLM to look at the chat responses and generate three follow-up questions that would be useful. Guidance and refinement are ways to augment open-ended AI interfaces.
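Here is a rough sketch of how guidance and refinement could be wired up. It is an illustration under assumptions, not the prototype's code: the personas, the prompt wording, and the function names are hypothetical, and `llm` is any function you supply that takes a prompt string and returns text.

```python
import re

# Guidance: hypothetical starter personas and example questions (assumption),
# shown before the open-ended input so people have a place to start.
STARTERS = {
    "Founder": "Who researches early-stage startups?",
    "Student": "Which professors study AI and product design?",
}


def followup_prompt(question, answer, n=3):
    """Refinement: ask the LLM for follow-up questions based on the last exchange."""
    return (
        f"A user asked: {question}\n"
        f"The assistant answered: {answer}\n"
        f"Suggest {n} short follow-up questions, one per line."
    )


def parse_followups(raw, n=3):
    """Strip bullets and numbering from the LLM's reply and keep the first n lines."""
    questions = []
    for line in raw.splitlines():
        cleaned = re.sub(r"^\s*(?:[-*•]|\d+[.)])\s*", "", line).strip()
        if cleaned:
            questions.append(cleaned)
    return questions[:n]


def suggest_followups(question, answer, llm, n=3):
    """Run the refinement step with any callable that maps a prompt to text."""
    return parse_followups(llm(followup_prompt(question, answer, n)), n)
```

In an interface, the `STARTERS` would render as clickable options above the input, and the output of `suggest_followups` would render as three buttons under each chat response.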

There’s so much more to do here, and we’re just getting started. What happens when LLMs meet websites, and how might this new technology make websites more effective in completing their Jobs To Be Done? Stay tuned for more explorations on LLM-powered website features with Upstatement in this coming month.

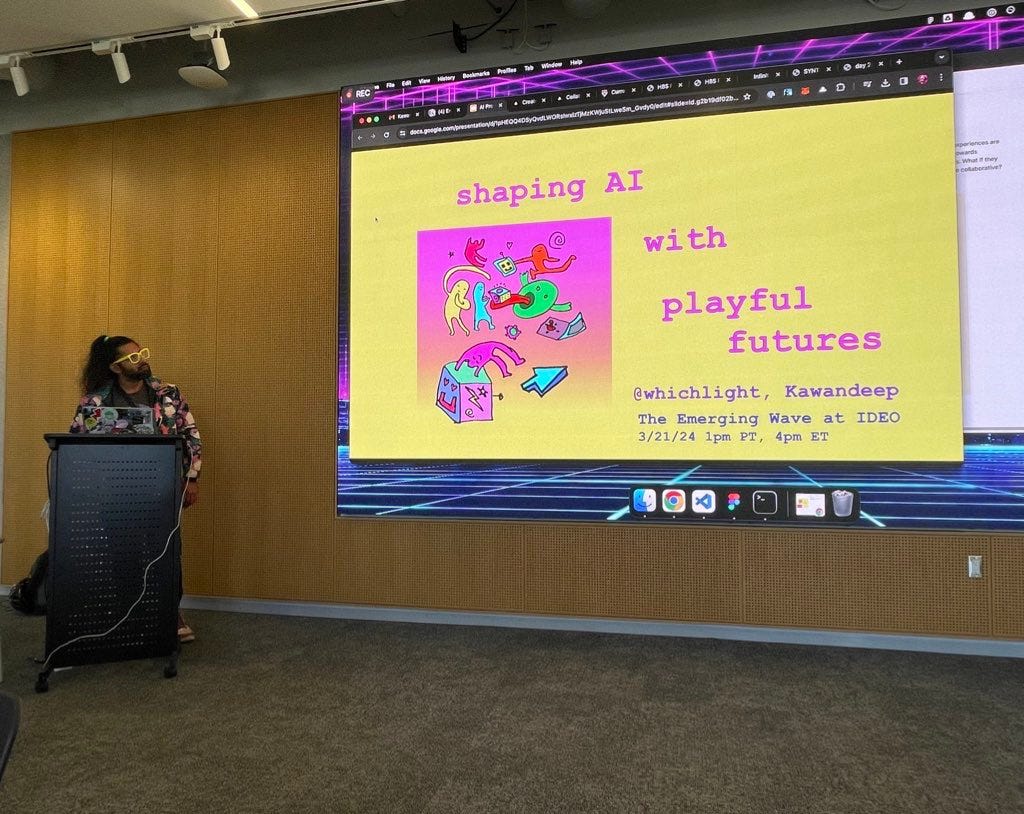

Speaking at The Emerging Wave series at IDEO

I gave a talk and facilitated a brainstorm on play and AI at IDEO. I was super excited to give this talk with IDEO’s Play Lab. I began with a few visual and sonic webtoys that we played with together, then shared my demos, and finally took all of these ideas and playful moments and launched into a brainstorm.

The brainstorm jumped off of a few inquiries in my last newsletter on LLM-native playful experiences. This is what we jammed on:

What do LLM-native playful experiences look like?

What if creating with LLMs was as intuitive and expressive as Play-Doh and Lego?

Many AI experiences are directed towards individuals. What if they were more collaborative?

Here are a couple of idea-sparks that came out of the brainstorm to explore LLM-native play in projects. Start with:

Some basic, discrete unit: an interface of modules to combine and move around like blocks. And then when you combine them, there are some interactions between components that are in part LLM-driven.

Interactions that are quick, and if possible, real-time.

What comes to mind?

If this sounds interesting to you, I’ve started to share more about my work, and am open to speaking or workshops at meetups, conferences, and internal talks.

💥 DJ + livecoding with HELLADEEP

Soon after my livecode visuals performance with a quartet, I performed with HELLADEEP at Eli’s Mile High Club in Oakland for my bday event 222fest. With all of the practice ahead of the quartet show, I felt so comfortable improvising and trying out different patterns in p5live. The whole show flowed and felt wonderful. Instead of going for 30 minutes as I originally imagined, I went for the hour. You can see clips of the show here:

Thanks for reading!

🙌 Follow what I’m up to by subscribing here and see my AI projects here. If you know anyone who would find this post interesting, I’d really appreciate it if you forwarded it to them! And if you’d like to jam more on any of this, I’d love to chat here or on twitter.

📚 Check out my AI Resources list. I made this list for myself to stay up to date on AI things and organize resources I find helpful.

🤭 I made a book. It’s called “Feeling Great About My Butt,” and is a book of illustrations and words that find ways to make space for feelings of whimsy, devastation, and growth.

📞 Book an unoffice hours conversation: We could talk about something you’re working on, jam on possibilities for collaboration, share past experiences and stories, draw together / make a zine, or meditate.