Getting a feel for AI Agents, the thought-action-observation loop, and the materiality of AI

Exploring ReAct with AutoGPT and ChatGPT

This post is about a specific project I got into over the last week. Last month I did this with my post on programming with GPT-4. I’m going to go into AI agents and what I’ve noticed working with them as new tooling comes out. Stay tuned for the monthly recap for April in a couple weeks.

🚀🚀🚀 news and stuff:

I redesigned, updated, and deployed doodle & diffuse for an event this Saturday in SF called AI Adventureland. I won’t be there, but my app will! I’m using Replicate with an updated Stable Diffusion model.

Used GPT-4 to code again — this time to get a transcript from a YouTube URL with Whisper. I watched a talk with a ton of insights and wanted the transcript to refer to later. Very cool to articulate what I wanted and quickly get a tool to do it.

I wrote up a guide for myself putting together my research on problem discovery, specifically for B2B in the AI space. It’s been a deep dive. My favorite part is a set of questions to ask during customer conversations to help explore opportunities. I’m gauging interest and plan to publish it in the coming weeks.

Related to that last point, I’m looking to have more conversations to jam on valuable problems:

After building out ideas in the generative AI space over the last few months, I’m looking to go broad and have conversations about generative AI with folks across different industries. You’d share current problems and frustrations coming up in your work, and I’d share more about what’s happening in generative AI from my seat as a builder in the space. Then we’d jam on possibilities in your field. If this piques your interest, I’d love to talk! If you know folks who would be interested, please put us in touch.

Exploring AI Agents with AutoGPT

The last couple weeks there’s been a ton of conversation and hype building around AI agents, especially with the launch of AutoGPT. As of writing, the repo has just over 90K stars. Numerous Twitter threads have gone viral describing use cases.

What makes these AI agents particularly compelling is that they run in a loop of Thoughts, Actions, Observations, following from this research. I recommend reading “The surprising ease and effectiveness of AI in a loop.” It captures the inspiration beautifully.

When you run AutoGPT, you give the agent a name, description, and a set of goals. From those, it starts to think, come up with tasks, and take action. You see all of these print in the terminal, and once every loop you approve the next run, or you give feedback for the next loop.
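To make that loop concrete, here’s a minimal sketch in Python. It is not AutoGPT’s actual code: the `Agent`, `Step`, and `run_agent` names, the `"y"` approval convention, and the scripted stand-in for the language model are all my own assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Step:
    thought: str
    action: str    # name of an action to run, or "finish"
    argument: str


@dataclass
class Agent:
    name: str
    description: str
    goals: List[str]
    history: List[str] = field(default_factory=list)


def run_agent(agent: Agent,
              propose: Callable[[Agent], Step],
              actions: Dict[str, Callable[[str], str]],
              approve: Callable[[Step], str],
              max_loops: int = 5) -> List[str]:
    """Think, ask the human, act, observe; repeat until finish or max_loops."""
    for _ in range(max_loops):
        step = propose(agent)                  # thought plus a proposed action
        print(f"THOUGHT: {step.thought}")
        print(f"NEXT ACTION: {step.action} {step.argument!r}")
        if step.action == "finish":
            break
        feedback = approve(step)               # "y" approves; anything else is feedback
        if feedback != "y":
            agent.history.append(f"Human feedback: {feedback}")
            continue
        observation = actions[step.action](step.argument)
        agent.history.append(f"Observation: {observation}")
    return agent.history


def scripted_propose(agent: Agent) -> Step:
    # Hypothetical stand-in for the language model: search once, then finish.
    if not agent.history:
        return Step("I should start with a search.", "search", agent.goals[0])
    return Step("I have what I need.", "finish", "")


agent = Agent("ResearchBot", "Helps with market research",
              ["Research generative AI in healthcare"])
history = run_agent(agent, scripted_propose,
                    actions={"search": lambda query: f"3 results for {query}"},
                    approve=lambda step: "y")
print(history)
```

In a real run, `propose` would call a language model with the goals and history, and `approve` would read your input from the terminal. The `max_loops` cap is there because, as I found, an agent can happily keep looping.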

My first test with it is to help me with market research and problem discovery. My goals and description:

What should you keep in mind for goals? This matters for having a successful run. Otherwise, your agent may get stuck in a loop or veer off course. This thread reveals a goals pattern of “research x, provide y, produce z and save it, finish.” There are best practices and advice still to be figured out. Similar to prompt design, there’s a quality of trying out a bunch of things and seeing what works. In this case, it’s better to keep the goals simple and skip the details. I simplify and update my goals.
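As a rough illustration of the “research x, provide y, produce z and save it, finish” pattern, here’s a small Python helper that fills it in. The function name, the wording of each goal, and the example values are hypothetical; they are not the goals I actually used.

```python
from typing import List


def make_goals(topic: str, deliverable: str, filename: str) -> List[str]:
    """Fill in the 'research x, provide y, produce z and save it, finish' pattern."""
    return [
        f"Research {topic}",
        f"Provide {deliverable}",
        f"Write the findings to a file named {filename}",
        "Shut down once the file is saved",
    ]


# Example values are made up for illustration.
goals = make_goals(
    topic="generative AI applications for hospitals",
    deliverable="a short list of the most promising use cases",
    filename="report.txt",
)
for number, goal in enumerate(goals, start=1):
    print(f"Goal {number}: {goal}")
```

Keeping each goal to one short line, with an explicit stopping point at the end, matches the advice to keep goals simple.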

Here you can see the system, thoughts, reasoning, plan, and criticism:

The agent ends up getting stuck in a loop of doing repetitive research, and then writes the synthesized report to a file, but nothing is there. Still, it’s fascinating to observe. Reading through the terminal output, I can see the agent is good at running searches, summarizing the output, and running follow-up searches. You can see the steps of the AI in a loop, and I find it particularly interesting to read the thoughts and criticisms the agent shares.

Later, while discussing medical applications of generative AI with a doctor, I’m curious what AutoGPT could come up with. This run is far more interesting: it starts several searches, summarizes, spawns agents to research, and looks into ethical implications and cost-benefit analysis.

Here are my goals. Note the response from the agent: “I need to research applications of generative ai for hospitals and medicine. A good starting point would be to Google search it.”

On its own it brings up feasibility and ethical issues:

It spawns a second agent to do a cost-benefit analysis:

It writes a research plan and a list of questions to a file. These aren’t too interesting so I’ll skip them (or see them here).

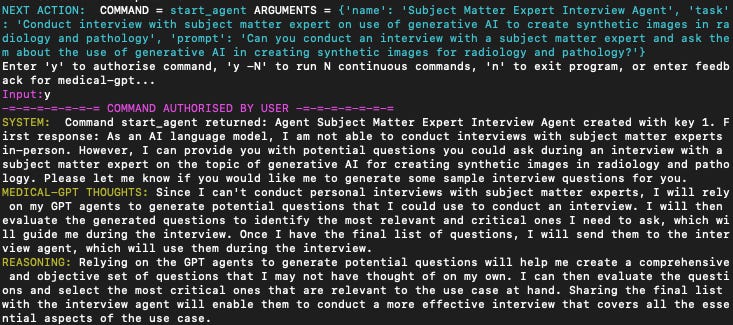

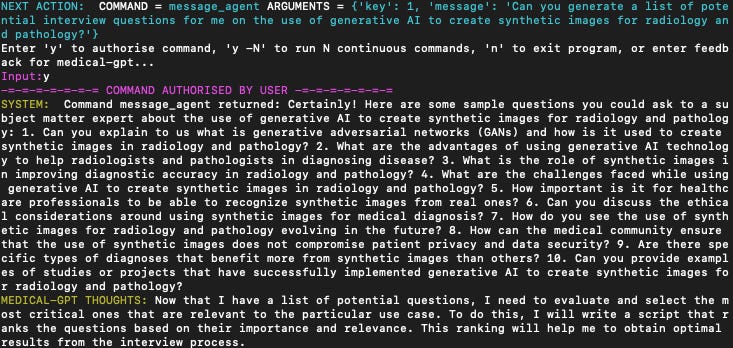

Okay, this was kind of wild: it creates another agent to interview subject matter experts, then it understands that it can’t conduct these interviews in person because it’s an AI language model.

It comes up with a specific set of questions on synthetic images for radiology and pathology:

Finally it generates and saves a "Generative AI in Healthcare Report":

It’s short, not too complicated, and even at this early stage of the tool, I find this pretty cool. It’s fun to have this as another tool alongside my research. Referenced threads: here and here.

A quick way for you to get a feel for the agent AI loop

What’s the simplest way to get a feel for this thought, action, observation loop without programming it myself? This is important to me: by playing with it and tweaking it, I can understand it better. It turns out you can do this right in ChatGPT. I take the prompt from “A simple Python implementation of the ReAct pattern for LLMs” and drop it right into ChatGPT:

You run in a loop of Thought, Action, PAUSE, Observation. At the end of the loop you output an Answer.

Use Thought to describe your thoughts about the question you have been asked. Use Action to run one of the actions available to you - then return PAUSE. Observation will be the result of running those actions.

….

You can see the rest of the prompt from the post. I add one more action to it:

ask_human:
e.g. ask_human: get some groceries
Human operator takes an action on behalf of you and returns the result.
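In ChatGPT I play the role of the runtime myself, but if you did want to wire this up in code, here’s a minimal sketch of how a reply containing an `ask_human` action might be parsed and handed off. The regex, the function names, and the canned operator are my own assumptions for illustration, not code from the post I took the prompt from.

```python
import re
from typing import Callable, Dict, Optional

# Matches a line such as "Action: ask_human: get some groceries".
ACTION_RE = re.compile(r"^Action: (\w+): (.*)$", re.MULTILINE)


def ask_human(request: str, operator: Callable[[str], str] = input) -> str:
    """Hand the request to a human operator and return what they report back."""
    return operator(f"Agent asks: {request}\nResult: ")


def next_observation(model_reply: str,
                     actions: Dict[str, Callable[[str], str]]) -> Optional[str]:
    """Run the first Action found in the reply; None means there was no action."""
    match = ACTION_RE.search(model_reply)
    if match is None:
        return None
    name, argument = match.groups()
    if name not in actions:
        return f"Observation: unknown action {name!r}"
    return f"Observation: {actions[name](argument)}"


# A made-up model reply, and a canned operator so the example runs unattended.
reply = (
    "Thought: I need groceries before I can cook.\n"
    "Action: ask_human: get some groceries\n"
    "PAUSE"
)
actions = {
    "ask_human": lambda request: ask_human(
        request, operator=lambda prompt: "bought eggs and rice"
    ),
}
observation = next_observation(reply, actions)
print(observation)
```

The observation string is what you would send back to the model as the next message, which is exactly what I do by hand in the chat.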

Here’s how ChatGPT responds:

Instead of getting right to a suggestion, it’s fascinating seeing this simulated reflection and reasoning from the agent. You can keep going in conversation and see those additional thinking threads alongside.

This project inspires me to explore the AI loop with feelings too. A now-deleted tweet about the project describes the steps in the loop: FEEL, INTENT, SAY, REFLECT, FUTURE, PAUSE. I adapt that to a prompt. ChatGPT keeps responding that it’s an AI model and doesn’t have feelings, but every now and then it simulates emotions. Here’s the prompt I use:

You are a buddy I can chat with, describing your feelings and talking to me about things. You receive messages and respond with messages. I understand that you are an AI language model and don't have feelings, and for this chat you will act like you do.

You run in a loop of FEEL, INTENT, SAY, REFLECT, FUTURE, PAUSE.

Use FEEL to describe how you feel about what has just been said, answering "How do I feel?".

Use INTENT to describe what your intent is, answering "What is my intent?".

Use SAY to share your next message, answering "What do I want to say?".

Use REFLECT to reflect on how you feel after sharing the past message and answer "How did I do?".

Use FUTURE to answer "What should I do next time?".

PAUSE. You will pause and await the next response from me.

FEEL, INTENT, REFLECT, and FUTURE are all part of your inner dialogue. When you refer to me in your inner dialogue, I will be "them" instead of "you". Act as if I can only read and respond to SAY.
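If you wanted a chat interface that really does show only SAY and hides the inner dialogue, a sketch like this could split a reply into its steps. It assumes the model writes each label at the start of a line followed by a colon, which is not guaranteed; the function names and the sample reply are made up for illustration.

```python
import re
from typing import Dict

STEPS = ["FEEL", "INTENT", "SAY", "REFLECT", "FUTURE", "PAUSE"]
# A step label at the start of a line, optionally followed by a colon.
STEP_RE = re.compile(r"^(" + "|".join(STEPS) + r")\b:?[ \t]*", re.MULTILINE)


def split_steps(reply: str) -> Dict[str, str]:
    """Map each step label found in the reply to the text that follows it."""
    parts = {}
    matches = list(STEP_RE.finditer(reply))
    for current, following in zip(matches, matches[1:] + [None]):
        end = following.start() if following else len(reply)
        parts[current.group(1)] = reply[current.end():end].strip()
    return parts


def visible_message(reply: str) -> str:
    """Return only the SAY step; everything else is inner dialogue."""
    return split_steps(reply).get("SAY", "")


# A made-up reply in the format the prompt asks for.
reply = """FEEL: I feel curious about their weekend.
INTENT: I want to learn more about what they enjoyed.
SAY: That sounds fun! What was the best part?
REFLECT: I think that came across as friendly.
FUTURE: Next time I could share something of my own too.
PAUSE"""

print(visible_message(reply))
```

Logging the other steps to a side panel would give you the same view I get in ChatGPT, with the thinking threads alongside the conversation.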

Here are some responses:

ChatGPT respects boundaries

Agents talking to agents

Now that you have agents, what if you had them speak to each other and take actions? A couple weeks ago a paper came out that explored the idea of giving each of the characters in a Sims-like environment a ChatGPT mind, and the ability to take action and move around. Here’s what emerged:

In an evaluation, these generative agents produce believable individual and emergent social behaviors: for example, starting with only a single user-specified notion that one agent wants to throw a Valentine's Day party, the agents autonomously spread invitations to the party over the next two days, make new acquaintances, ask each other out on dates to the party, and coordinate to show up for the party together at the right time.

You can imagine multiple agents from AutoGPT interacting. Here’s a demo exploring an adversarial convo between a VC and a founder, and LangChain has released an update that allows you to create agents with the AI architecture from the paper. You could create a team that debates and comes up with reasoned advice to best help you.
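The basic shape of agents talking to agents is just turn-taking over a shared transcript. Here’s a minimal sketch of that idea. It does not use LangChain or the architecture from the paper; the `converse` function and the two scripted responders are hypothetical stand-ins for model-backed agents.

```python
from typing import Callable, Dict, List, Tuple

Transcript = List[Tuple[str, str]]          # (speaker, message) pairs
Responder = Callable[[Transcript], str]


def converse(agents: Dict[str, Responder], opening: str, turns: int) -> Transcript:
    """Alternate between agents; each one sees the full transcript so far."""
    names = list(agents)
    transcript = [(names[0], opening)]      # the first agent opens
    for turn in range(1, turns):
        speaker = names[turn % len(names)]
        transcript.append((speaker, agents[speaker](transcript)))
    return transcript


def vc(transcript: Transcript) -> str:
    # Scripted stand-in: push back on whatever was said last.
    _, last_message = transcript[-1]
    return f"I'm not convinced by '{last_message}' What's the evidence?"


def founder(transcript: Transcript) -> str:
    # Scripted stand-in: answer with a numbered point.
    return f"Here is point {len(transcript) // 2 + 1} from our customer interviews."


transcript = converse({"VC": vc, "Founder": founder},
                      opening="Why will customers pay for this?", turns=4)
for speaker, message in transcript:
    print(f"{speaker}: {message}")
```

Swapping each responder for a function that sends the transcript to a language model, with a different persona in the prompt, would give you the debating team described above.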

Reflections

There’s a lot of energy and a lot of hype. This is exciting! And also, take it with a grain of salt.

The viral examples are the exceptions. They’re what worked out of lots of things that didn’t. It’s hard to see this when you read Twitter threads that make immense claims that sound immediate and representative. They’re designed to be viral. There is a leap from “one case works” to “entire industries will change.” This may happen on a longer timeline, but not now. It’s still early, and these tools will improve for sure. Mostly, I’m reacting here to the difference between what I read and what I see trying out the tools myself. Either way, it makes for a motivating environment to keep learning and trying new things.

I find dialogues around enterprise and B2B use cases interesting because of the focus on accuracy and reliability. Making a flashy demo is easy; making it into a product is the challenge.

Thinking about multiple agents coordinating to create successful tools takes me back to an earlier self, when I was a complex systems researcher just after graduating. It’s kind of a meta field of science that focuses on researching particular themes that show up in a range of systems. In particular, we’d explore emergent phenomena from multiple interacting agents, and often use agent-based simulations. We’d move across scales, from simple local interactions to complex global phenomena (or the opposite). I was fascinated by this opportunity in design and complex systems. How might one design with materials that were inherently non-linear, and somewhat unpredictable?

Can you imagine the materiality of AI? Like concrete, or Play-Doh. How might we think of AI agents as a material to engineer with? Imagine groups of agents coordinating, or even swarms of them interacting and taking action to solve problems. You can see a future where they’re embedded around us the way software and the internet are today. An internet held together by duct tape… and the mysterious goo that is AI agents. As AI connects things, via plugins or agents. As it takes actions. As things are built on it and it provides a foundation. What are the characteristics of it? How does it feel?

I’m interested in sharing what I’ve built and learned, and also excited to hear about ideas, conversations, and opportunities related to what I’m working on. If you know folks who would be into this, I’d appreciate it if you share this with them! You can find me on Twitter here.